In the last few years there have been many notable deepfake incidents that have amazed and amused the internet. The technology behind deepfakes offers many interesting possibilities for creative sectors: dubbing and repairing video, closing the uncanny valley for CG characters in films and video games, sparing actors from repeating a fluffed line, and powering apps that let us try on new clothes and hairstyles.

The technology is even being used to produce corporate training videos and train doctors. However, there remains a prevailing fear that the technology could be used for sinister ends. If you’d like to delve deeper into these concerns, check out our piece on the ethics of digital humans.

Deepfake Threats

Deepfakes, a novel form of threat falling within the broader category of synthetic media, employ artificial intelligence and machine learning to craft convincing videos, images, audio, and text portraying events that never occurred. While certain applications of synthetic media serve harmless entertainment purposes, others pose inherent risks.

The danger of deepfakes and synthetic media doesn’t solely stem from the technology itself but rather from the human tendency to trust visual information. Consequently, deepfakes don’t necessarily need to be highly advanced to effectively disseminate misinformation.

Interviews with industry experts reveal varying perspectives on the severity and immediacy of the synthetic media threat, ranging from urgent concern to a more measured preparedness approach.

To elucidate potential deepfake threats, we’ve examined scenarios in commerce, society, and national security. The likelihood of these scenarios unfolding and succeeding may rise as the cost and resources required to create usable deepfakes decrease, paralleling the historical trend in synthetic media evolution.

Given the multifaceted nature of the issue, there’s no singular solution. A comprehensive approach should involve technological innovation, education, and regulation as integral components of detection and mitigation strategies. Successful efforts will necessitate extensive collaboration between private and public stakeholders to overcome existing challenges and safeguard against emerging threats while upholding civil liberties.

Deepfake Incidents

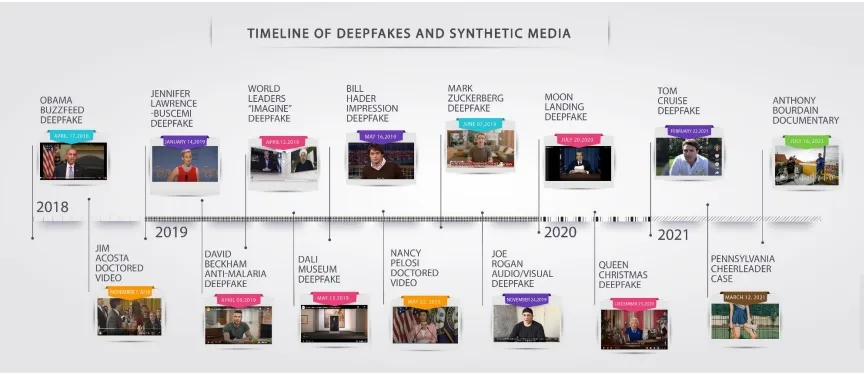

Since the first deepfake in 2017, there have been many developments in deepfake incidents and related synthetic media technologies. The timeline below provides a listing of some of the most well-known and representative examples of deepfakes, as well as some “cheapfakes” and one instance in which deepfakes were initially implicated but never proven to have been used. An addendum to this report, which provides summaries of these examples and links for further information, is also available.

- Deepfake of Political Figures (2018): Deepfake technology first gained wide recognition when fake videos of political figures, including Barack Obama and Vladimir Putin, surfaced online. These videos were created using AI to manipulate existing speeches and interviews.

- Deepfake Voice Scam (2019): Criminals used deepfake technology to mimic a CEO’s voice in a fraudulent attempt to transfer funds. The scammer impersonated the executive and directed an employee to transfer a significant amount of money.

- Deepfake Pornography (2017-2019): Deepfake technology has been extensively used to create non-consensual explicit content by superimposing the faces of celebrities or individuals onto adult videos. This raised concerns about privacy and consent.

- Deepfake of Facebook CEO (2019): A deepfake video of Mark Zuckerberg was created to demonstrate the potential misuse of AI-generated content. The video showed Zuckerberg discussing Facebook’s control over users’ data.

- Deepfake in Indian Politics (2019): Deepfake videos emerged in Indian politics, where political figures’ faces were manipulated to appear in compromising situations. This raised concerns about the use of deepfakes for political misinformation.

- Deepfake in Corporate Sabotage (2020): Reports surfaced about deepfake technology being used for corporate espionage. Attackers used AI-generated audio to impersonate a CEO, attempting to deceive employees and gain unauthorized access.

- Deepfake of Tom Cruise on TikTok (2021): A series of deepfake videos on TikTok featured a remarkably realistic impersonation of actor Tom Cruise. The videos demonstrated the potential for creating highly convincing and misleading content.

- Deepfake Audio in Fraud (2019): Criminals used deepfake audio to mimic the voice of a company executive and authorize fraudulent transactions. The AI-generated voice convincingly imitated the executive’s speech patterns and intonations.

- Deepfake in Misinformation (2020): Deepfake videos have been used to spread misinformation, such as altering speeches or interviews to create false narratives. This raises concerns about the potential impact on public discourse and trust.

- Deepfake of Journalists (2020): Deepfake technology has been used to create fake videos or audio recordings of journalists, potentially damaging their credibility and spreading false information.

More than just “deepfakes” – “Synthetic Media” and Disinformation

Deepfakes actually represent a subset of the general category of “synthetic media” or “synthetic content.” Many popular articles on the subject [7, 8] define synthetic media as any media which has been created or modified through the use of artificial intelligence/machine learning (AI/ML), especially if done in an automated fashion. From a practical standpoint, however, within the law enforcement and intelligence communities, synthetic media is generally defined to encompass all media which has either been created through digital or artificial means (think computer-generated people) or media which has been modified or otherwise manipulated through the use of technology, whether analog or digital.

For example, physical audio tape can be manually cut and spliced to remove words or sentences and alter the overall meaning of a recording’s content. “Cheapfakes” are another version of synthetic media in which simple digital techniques are applied to content to alter the observer’s perception of an event.

Science and technology are constantly advancing. Deepfakes, along with automated content creation and modification techniques, merely represent the latest mechanisms developed to alter or create visual, audio, and text content. The key difference they represent, however, is the ease with which they can be made, and made well. In the past, casual viewers (or listeners) could easily detect fraudulent content. That may no longer be the case, which lets any adversary interested in sowing misinformation or disinformation use far more realistic image, video, audio, and text content in their campaigns than ever before.

Most organizations simply aren’t prepared to address these types of threats. Back in 2021, Gartner analyst Darin Stewart wrote a blog post warning that “while companies are scrambling to defend against ransomware attacks, they are doing nothing to prepare for an imminent onslaught of synthetic media.”

With AI rapidly advancing, and providers like OpenAI democratizing access to AI and machine learning via new tools like ChatGPT, organizations can’t afford to ignore the social engineering threat posed by deepfakes. If they do, they will leave themselves vulnerable to data breaches.